Now that I'm getting ready to apply for jobs and graduate programs, I've had to look at my transcript more often than I would have liked. Over the past four years, I've had my share of abysmal grades. And I'm not being dramatic: some semesters have been about as atrocious as you can get. When I think back to the courses in which I received such poor grades, I realize that those classes were based almost solely on exams. Do poorly on an exam or two, and you don't really have an opportunity to recover. Is that really fair? Grades, after all, are supposed to indicate how well a student understands the course material, not to punish inadequate or misguided study habits.

I get that "intelligence" or "knowledge of a subject" needs to be operationalized somehow in order to make comparisons. But while an exam is a way to numerically gauge how much a student has retained about a subject, it almost never truly displays their understanding of it. When you see a student cramming for an exam, whether in biochemistry or history, you see them hunched over a notebook full of facts and figures, trying to jam as much as possible into their head in the hope that, come exam time, they will be able to regurgitate enough to form cohesive answers.

If exams really did a good job of assessing knowledge of a subject, why do students dread cumulative exams so much? If we had truly understood the material we were previously tested on, we would prefer a cumulative exam, since it would let us integrate material from multiple exams. If professors really want to use testing to assess students' understanding, they should make exams cumulative, because to me, mastery of a subject requires integration rather than the ability to report on bits and pieces of material.

While standardized testing has been continually lambasted as an inadequate measure of how prepared a student is for college or graduate school, there isn't much of an alternative. Once you're at school, however, professors have an entire semester to gauge their students' understanding of the course material. If the grade is spread out over a variety of assignments, some of which the professor grades subjectively, students who consider themselves "bad test-takers" will feel they have an equal opportunity to do "well" in the class, and the reduced emphasis on extrinsic motivation would most likely spur students' interest in the course material itself. Isn't that what college courses are supposed to be about anyway? Spending four years constantly worried about grades and cramming could easily lead students to dislike fields they would otherwise be interested in.

It would be interesting to see some kind of study comparing students who take classes in which grades are based overwhelmingly on exams with students whose grades are based more on presentations, papers, and integrative assignments. Not only would it be cool to see how their GPAs differ, but also to see which students pursue careers directly related to what they studied in college. How satisfied were they with their college experiences? How do they perform on other, non-academic integrative and/or memory tasks? Are there really such people as "bad test-takers"?

November 12, 2010

November 4, 2010

two key aspects to successful comparative psychology research

This semester, I'm taking a seminar on Comparative Psychology, and I have repeatedly found myself frustrated with the overwhelming majority of the studies we have read. Maybe I'm becoming too opinionated a reader, but it seems to me that two fundamental things are missing from a lot of these studies. When trying to understand whether non-human subjects possess specific psychological abilities (e.g. theory of mind, self-recognition, reciprocal altruism), you must keep two things in mind. First, animals may not perform the behaviors we are looking for without adequate motivation to do so. Second, completely novel situations may provoke an animal to react in ways it normally would not. Therefore, it is important to provide situations that resemble ones the animal might encounter day to day in the natural environment to which its species has adapted (unless, of course, using a novel situation removes a bias in the experiment).

(1) Experimenters should provide adequate motivation to elicit the behavior of interest: In an attempt to determine whether chimpanzees could use logic to choose the most efficient search technique available (Call & Carpenter, 2001), the experimenters baited one of three tubes with a reward and gave the subjects the opportunity to search for it. They called systematic/exhaustive search techniques "inefficient", but without any time pressure or penalty, why shouldn't the subjects perform exhaustive searches? For a human, it would be the smart thing to do. If there were no cost to checking every option for a reward 2-3 times, why wouldn't I? After all, why should I assume there is only one reward? If I only had a certain amount of time before my options were taken away, though, I would be forced to employ a more efficient strategy. Perhaps in this study, the experimenter should have covered the apparatus after 60 seconds in each trial, thus ending the trial. Eventually, the chimpanzees would realize they had limited time to find the food reward and begin employing more logical/"efficient" strategies. Only if they still did not could we reasonably conclude that they lack the ability to do so.

(2) Studies should provide situations that are relevant to the natural habitat of the subject species: A 2005 study (Hattori & Kuroshima) examined whether capuchin monkeys can cooperate with one another to accomplish a common goal. The researchers found that the monkeys spent significantly more time looking at their partner when they needed help on a task. This result conflicted with previous studies (Visalberghi 1997; Visalberghi et al. 2000) that had failed to find any evidence of communication during cooperative tasks. The difference is that the 2005 study used a task that was more intuitive for capuchin monkeys, whereas the earlier studies used less familiar scenarios. In the earlier studies, the monkeys may simply have been more confused about the task in general and did not fully understand that it required their partner's cooperation. In the end, laboratories are only logistical necessities; what we really want to understand is whether animals can perform these behaviors in their natural environments.

Basically, in order to conclude that a subject with whom you cannot communicate does not possess a specific mental ability, you must design an experiment that does its best to elicit that ability. Only then can you conclude whether or not the subject has it.

October 31, 2010

maybe liberals just can't help themselves...

I'm a pretty firm believer that just about every aspect of an individual, from personality and tendencies to appearance and everything in between, is regulated by genes. That said, I had never considered the possibility that you could be born with a predisposition to be either Conservative or Liberal.

A variant of DRD4, the gene that codes for the dopamine D4 receptor in humans, has recently been linked to a tendency to be politically liberal. A medical genetics professor at UC San Diego, referring to the new finding, said that "we hypothesize that individuals with a genetic predisposition toward seeking out new experiences will tend to be more liberal - but only if they had a number of friends growing up." Being more open to new experiences is only one aspect of having a "more liberal personality", if it is one at all.

Whether you consider yourself a Republican, a Democrat, a Libertarian, or even a member of the Rent is Too Damn High Party, you're probably only one to a certain degree. Political ideology falls on a spectrum, because each party is an amalgam of platforms and issues. Can we really associate an individual's propensity to try new things with being politically liberal? Are there any personality traits we can really link to voting one way or the other? I personally think political views are mostly shaped by environmental factors (SES, upbringing, friends, etc.) rather than by genotype, which could explain how an individual's political views can shift with age or environment.

October 26, 2010

when is it no longer "just a fluke"?

A few days ago I took a screenshot of the NHL website, when the New York Islanders were leading the Eastern Conference, and the League, in points.

The Islanders, who finished 26th (out of 30) in the league last season and have been less than impressive for the better part of the decade, seem to be holding their own. Even without their "best" players, who were injured in the preseason, and without signing any big-name players during the off-season, the Isles are earning points in almost every game. How is this happening?

According to Rangers and Leafs fans, of course it's a fluke. But how many games will the Isles have to win to shut 'em up? In an 82-game season, will we have to wait for 20 games? 40? Or is it about consistently pulling out W's against the "hardest hittin' teams in the league"? Or will we have to wait and see who scoops up the coveted playoff spots? Calling a rival team's success a "fluke" is a common fan defense mechanism, but how long do I have to wait before I can legitimately remind Rangers fans how much more money they spend every year on "star" players who always seem to disappoint?

By the same token, is a 5-1 record finally enough to stop Giants fans from calling the Jets' season a "fluke"? Especially given that the NFL season is only 16 games long, compared to the NHL's 82...
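
One rough way to put a number on the "fluke" question is to ask how often an average team would post a record like this by luck alone. The sketch below is a hypothetical back-of-the-envelope illustration, not anything from the NHL or the articles above: it assumes every game is an independent win/loss with the same win probability `p` (0.5 for a .500 team), which ignores overtime losses, the NHL's points system, injuries, and strength of schedule. The helper names `tail_probability` and `min_wins_to_rule_out_luck` are made up for this example.

```python
from math import comb


def tail_probability(wins: int, games: int, p: float = 0.5) -> float:
    """Chance that a team with per-game win probability p wins at least
    `wins` of `games` independent games (the upper tail of a binomial)."""
    return sum(
        comb(games, k) * p**k * (1 - p) ** (games - k)
        for k in range(wins, games + 1)
    )


def min_wins_to_rule_out_luck(games: int, alpha: float = 0.05, p: float = 0.5):
    """Smallest win total over `games` games that an average (p) team would
    reach by luck less than `alpha` of the time; None if no total qualifies."""
    for wins in range(games + 1):
        if tail_probability(wins, games, p) < alpha:
            return wins
    return None


if __name__ == "__main__":
    # A Jets-style start: at least 5 wins in the first 6 games.
    print(tail_probability(5, 6))  # 0.109375, i.e. about 11%
    # Win totals needed over 20 games, 40 games, and a full 82-game season.
    for n in (20, 40, 82):
        print(n, min_wins_to_rule_out_luck(n))
```

Under these simplifying assumptions, an average team starts 5-1 or better roughly one time in nine, so six games is a thin sample; over 20 games, a team would need about 15 wins before luck alone drops below a 5% explanation. Real standings depend on far more than a coin flip, so this is only a sanity check.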

October 23, 2010

a spray of DNA keeps the bad guys away?

Some businesses in Holland have been using a new anti-burglary system, one that sprays synthetic DNA on robbers as they walk out the door. An employee deploys the spray without the robber's knowledge (it's odorless and invisible), which also alerts the local police department. The synthetic DNA (which isn't even that expensive to produce) is specific to the store from which it's deployed and is meant to help police link burglars to the scene of the crime. Businesses that use this system (usually installed by the police) have reported declines in crime, although no hard data are available yet.

What could be causing this decline? The synthetic DNA system hasn't yet been used to identify a criminal, but it has been triggered accidentally many times, which suggests it works more as a scare tactic than anything else. Businesses with the system installed are required to post a sign outside alerting customers. Even if it weren't required, it'd be a good move: the word "DNA" on a sign outside a store probably deters prospective burglars. I don't see how synthetic DNA spray is any more effective than UV ink, but fear of the unknown is daunting enough for most people. Just glancing at the readers' comments below the NYTimes article, I'm surprised at how little people actually understand about DNA. Some worry about being sprayed with "hybrid-human DNA", without realizing that synthetic DNA is inert and would cause no harm to the person it's sprayed on. More generally, I think criminals tend to associate "DNA" with "getting caught", which is enough to dissuade them.

In this case, the fear of the unknown appears to be effective enough to discourage robbers. It'll be interesting to see raw data pertaining to crime rates though...

October 20, 2010

overgeneralizing results

I was reading an article for my Neuroscience seminar about how the uncoupling protein UCP2 is required for the exercise-induced changes that occur in the hippocampi of mice (Dietrich, Andrews & Horvath, 2008). Compared with UCP2 knockout (UCP2ko) mice, only wild-type mice showed increases in both oxygen consumption during mitochondrial respiration and the number of synapses in the CA1 region of the hippocampus. The methods used in this paper were very straightforward, and the researchers appeared to control well for potential biases. It would be very difficult to dispute the validity of these results. I therefore accept, as Dietrich, Andrews and Horvath conclude, that voluntary exercise in mice increases mitochondrial respiration, mitochondria number, and spine synapse density in the dentate gyrus and the CA1 region of the hippocampus, all of which depend on UCP2.

Just when I thought I had finally read a paper for my seminar that hadn't bothered me in some way, I came across this in the conclusion: "Our data illustrate the role of uncoupling proteins in promoting brain plasticity and their participation in physiological and pathological adaptations of the brain." Isn't that statement a bit broad for a relatively straightforward study that really only assessed the importance of UCP2? Furthermore, the researchers note multiple times throughout the paper that they did not monitor the effects or levels of UCP3 or UCP5, two other uncoupling proteins. And what about UCP1? It is known for its role in generating heat in hibernating mammals; how would it come into play in exercise-induced brain function, if at all?

Overgeneralizing results in science can misdirect future research. The conclusion of this paper would lead one to believe that all uncoupling proteins have been found to have the same effects on these processes as UCP2, when in fact they may not. This got me thinking about the trade-off between overgeneralization and over-specificity, which was perceptively blogged about a few days ago. In scientific papers, overgeneralizing can mislead future research and cause important questions to be overlooked. On the other hand, being too specific could lead to frivolous research, wasting time and resources.

A more appropriate way to conclude this paper might have been to state that UCP2 has been implicated in these mechanisms, but that further research is required to determine whether all uncoupling proteins produce the same results.

October 11, 2010

mirror-guided body inspection is not an adequate measure of self-awareness in animals

Numerous comparative psychology and biology studies have used mirror-guided body inspection to answer the age-old question of whether animals are capable of self-recognition. Studies have used animals ranging from dolphins (Reiss & Marino, 2001) to primates. One would think that if a subject repeatedly positions itself in front of a mirror after a visible mark has been put on it, the organism must be recognizing itself. However, I think this method is largely flawed, and I find it tough to buy into any hard conclusions researchers draw from mirror-guided experiments.

First and foremost, the (hopefully) double-blinded observers are forced to subjectively anthropomorphize the subjects' behaviors. And although I am aware that I am being ironically anthropomorphic in my own reasoning, animals’ use of mirrors can be explained in a variety of ways.

The mirror itself is a novel object in the animal’s environment, and without a proper baseline assessment, researchers may wrongly attribute behavior at the mirror to self-awareness. The subjects may simply be curious about the introduction of a novel stimulus, and a decrease in time spent at the mirror as the experiment progresses may reflect habituation to the stimulus rather than self-recognition. By the same token, animals may not react to experimental marks at all if the marks do not resemble anything that would be cause for concern in their normal environment. For example, if the mark on the animal resembles a parasite or blood, the animal might be more motivated to remove it. However, if the animal has seen similar marks on other individuals and learned that they are no cause for concern, it could simply be ignoring the mark. This does not mean that the animal lacks self-recognition; it is simply not displaying the behaviors we are looking for in our assessment of self-recognition. This explanation is, of course, largely anthropomorphic, and I am attributing first- or even second-order intentionality to the animal to provide an alternative interpretation, but I think it is feasible for primates, especially chimpanzees.

Self-recognition is, in my opinion, largely mental and close to impossible to assess in organisms that cannot give verbal accounts of their experience. As in humans, an animal can possess a mental state without displaying it, and without a sufficient motive or cause for concern, animals may simply not display the behaviors we are looking for. That said, mirror-guided body inspection can provide some valid information about the possibility of non-human self-recognition, but it's simply not the whole picture.

October 7, 2010

is memory reorganization during labile states evolutionarily adaptive?

Fear memories go through two labile states. The first occurs shortly after learning, before consolidation, when the newly formed short-term memory is vulnerable to disruption (consolidated long-term memories, by contrast, are resistant). After consolidation, exposure to the proper environmental cues can retrieve the memory. Following this retrieval, the second labile state occurs, during which the memory is once again susceptible to alteration. Evidence (Nader et al. 2000; Kaang et al. 2009) has shown that protein-synthesis-dependent reconsolidation processes are required to maintain the original memory: administering a protein synthesis inhibitor before or immediately after memory retrieval disrupted the original memory. (I'm not quite sure how they elicited the retrieval, or how they measured exactly when it occurred, which would be interesting to know.)

Kaang et al. (2009) hypothesized that these reconsolidation processes induced by memory retrieval provide opportunities for memory updating or reorganization. Furthermore, they found that new information (stimuli) is necessary to trigger the destabilization process after memory retrieval. When I first read this, I figured it made sense evolutionarily. Wouldn't we want the opportunity to modify our memories if the environmental stimuli around us are changing? Especially for humans, who live much longer than rodents do, it would be important for memories to adapt to new technologies and experiences. No question about it. But as I thought further, I started to see all the downsides this could have.

Let's say that as a child you develop a taste aversion to dairy products because you have gotten sick from them on multiple occasions. If your memory serves you right, you have hopefully learned to stay away from dairy. But what happens if one day you eat dairy by accident and don't get sick? Should that single experience remodel your existing memory of dairy-induced discomfort? This example applies across species, especially since taste aversions are far more critical to the survival of rodents and other less complex animals than to humans. Anyway, my point is this: if memory reorganization occurs each time a long-term memory is retrieved, how drastic does the changed stimulus have to be to alter the existing memory? Does it have to occur more than once? Does it matter how long the memory has been encoded, or how many times it has previously been retrieved (the strength of the memory)? It just doesn't seem like it would make evolutionary sense for all long-term memories to be susceptible to disruption each time they are retrieved, does it? Would it make more sense to just form new memories in response to new stimuli rather than modify pre-existing ones? Or would that just require more (ugh, don't make me say it) neural plasticity/neurogenesis than our brains are capable of?

It's important to keep in mind that the studies I've read largely deal with fear-conditioned memories. It would be worth determining whether there are differences in the appearance of labile states across other types of memory (e.g. olfactory aversions, conditioned taste aversions, object/social recognition memory, spatial memory). Would it make sense for some of these to be routinely more (or less) susceptible to disruption than others?

Kaang, et al. (2009) hypothesized that these reconstruction processes induced by memory retrieval provide opportunities for memory update or reorganization. Furthermore, they found that new information (stimuli) must be necessary to trigger the destabilization process after memory retrieval. When I first read this, I automatically figured this made sense evolutionarily. Wouldn't we want the opportunity to modify our memories if our environmental stimuli are changing around us? Especially as humans, who live for a much longer time than do rodents, it would be important for our memories to adapt to new technologies and experiences. No question about it. But then as I thought further - I thought about all the downsides this could potentially have.

Kaang, et al. (2009) hypothesized that these reconstruction processes, induced by memory retrieval, provide opportunities for memory updating or reorganization. Furthermore, they found that new information (stimuli) is necessary to trigger the destabilization process after memory retrieval. When I first read this, I immediately figured it made sense evolutionarily. Wouldn't we want the opportunity to modify our memories if the stimuli in our environment are changing around us? Especially for humans, who live much longer than rodents do, it would be important for our memories to adapt to new technologies and experiences. No question about it. But then, as I thought further, I started thinking about all the downsides this could potentially have.

Let's say as a child you develop a taste aversion to dairy products because you have gotten sick from them on multiple occasions. If your memory serves you right, you have hopefully learned to stay away from dairy. But what happens if one day you have dairy by accident and you don't happen to get sick? Should that single experience remodel the existing memory you already have of dairy-induced discomfort? This example could be applied across species, especially since taste aversions are much more imperative to the survival of rodents and less complex animals than to that of humans. Anyway... my point being: if memory reorganization occurs each time a long-term memory is retrieved, how drastic does the changed stimulus have to be to alter the existing memory? Does it have to occur more than once? Does it matter how long the memory has been encoded, or how many times it has previously been retrieved (i.e., the strength of the memory)? It just doesn't seem to make evolutionary sense for all long-term memories to be susceptible to disruption each time they are retrieved, does it? Would it make more sense to just form new memories in response to new stimuli rather than modify pre-existing ones? Or would that just require more (ugh, don't make me say it) neural plasticity/neurogenesis than our brains are capable of?
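
To make those questions a bit more concrete, here is a toy sketch. It is entirely my own illustration with made-up parameters, not a model from Kaang, et al. (2009): a retrieved memory only becomes modifiable when the new experience differs enough from what the memory predicts (a stand-in for "new information is necessary to trigger destabilization"), and when it does, it moves part of the way toward the new outcome using a Rescorla-Wagner-style update rule. The names `retrieve_and_update`, `learning_rate` and `mismatch_threshold` are hypothetical.

```python
"""Toy model (hypothetical, not from Kaang et al. 2009): a retrieved memory is
only updated when the new experience differs enough from what it predicts."""


def retrieve_and_update(strength, outcome, learning_rate=0.2, mismatch_threshold=0.3):
    """Return the memory strength after one retrieval.

    strength: current expectation of getting sick (0.0 to 1.0)
    outcome: what actually happened this time (1.0 = sick, 0.0 = fine)
    The memory is destabilized (and so modifiable) only if the prediction
    error exceeds mismatch_threshold; otherwise it is left untouched.
    """
    error = outcome - strength
    if abs(error) <= mismatch_threshold:
        return strength  # not enough "new information": memory stays stable
    # Rescorla-Wagner-style step part of the way toward the new outcome
    return strength + learning_rate * error


def exposures_to_extinguish(strength=1.0, target=0.5, **kwargs):
    """Count consecutive sickness-free exposures needed to drop below target.

    Returns None if updates stall (mismatch too small) before reaching target.
    """
    count = 0
    while strength >= target:
        new_strength = retrieve_and_update(strength, outcome=0.0, **kwargs)
        if new_strength == strength:
            return None  # memory no longer destabilizes; aversion persists
        strength = new_strength
        count += 1
    return count


print(exposures_to_extinguish())            # -> 4
print(exposures_to_extinguish(target=0.2))  # -> None
```

With these made-up numbers, one sickness-free dairy exposure only weakens the aversion from 1.0 to 0.8, it takes four in a row to push it below 0.5, and once the remaining mismatch falls under the threshold the memory stops changing at all. Whether real reconsolidation behaves anything like this is exactly the open question.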

It's important to take into consideration that the studies I've read largely deal with fear-conditioned memories. It would be compelling to determine whether there are differences in the appearance of labile states in other types of memories (e.g., olfactory aversions, conditioned taste aversions, object/social recognition memory, spatial memory). Would it make sense for some of these to be more or less routinely susceptible to disruption?

October 6, 2010

"everything's better in moderation" - neural plasticity can't be the exception to that rule.

My neuroscience and behavior seminar, required for all of us lucky (or not-so-lucky) neuroscience majors at Vassar, has been molded around one of the broadest and, frankly, most irritating topics of all time: plasticity. Like, I get it. Plasticity's important. New synapses, changes in dendritic spines, pruning: all clearly vital to a functioning brain. And it's definitely necessary for someone studying neuroscience, or any science for that matter, to understand that the brain is capable of changing, even after the so-called "critical period".

Environmental conditions, both intracellular and extracellular, have the potential to impact the structure of synapses in the brain. Behaviors can even be re-delegated to new brain regions after an injury (depending on age and other factors, of course). While this all sounds great, there is no way that neural plasticity is always a positive thing. It doesn't make sense that something that can change relatively often would be beneficial to most organisms. Perhaps one could argue that plasticity becomes more favorable as the average lifespan of a species increases (over a century, a lot of environmental stimuli can change dramatically), but oftentimes plasticity studies are done on mice and rats, neither of which has a long lifespan. What would be the advantage of neural plasticity after the critical period of development in a mouse that lives only a year or two? Could it be more advantageous for the mouse to have strengthened synapses and stable dendritic branches throughout its lifetime? Is it possible that an organism undergoing neuronal plasticity is actually being diverted from stimuli it should be paying attention to?

It's week 5 of my neuroscience seminar. I have read 17 articles on how neuronal plasticity occurs at different levels of an individual's biology (from chromatin remodeling all the way up to the remodeling of cortical neurons), and on how a thousand different neuronal processes are altered by synaptic plasticity and dendritic changes. In discussing all 17 articles, not one student has questioned the real benefits of these changes occurring in post-developmental subjects. Admittedly, neither have I. I can't say whether my classmates share my hesitation to challenge my professor's apparent deep affection for neurogenesis and plasticity, but there is no way they're not also feeling this frustration. Nothing in science is ever good all the time. In fact, most of the time, we eventually find out that things we thought were beneficial have colossal downsides. So when is it too much when it comes to plasticity?

using a 31-gene profile to predict the occurrence of breast cancer metastasis

So I know it's been a while since I've posted, but the first month of senior year has been a little hellish. Turns out I don't know nearly enough biochemistry for molecular bio, or enough about random brain regions for my neuroscience seminar, but maybe it'll just motivate me to keep up with my genomics readings and blog posts.

This semester, I'm taking a class about the biopolitics of breast cancer. While the class itself is, by and large, one big women's studies-fueled (don't even get me started...) debate, it has prompted me to look a little more into breast cancer in the US. I happened to stumble across an article on GenomeWeb that really sparked my interest: there is now a way for patients diagnosed with breast cancer to find out the likelihood that their cancer will metastasize, and how long it would take, based on a 31-gene signature.

This time-to-event breast cancer gene panel can be seen as a totally new type of diagnostic paradigm, one that has the potential to alter the clinical management of breast cancer. On one hand, if the panel is accurate, individuals affected by breast cancer could avoid drastic treatment options when it predicts a very low chance of metastasis. On the other, patients could seek earlier therapies if they find out there is a good chance of their cancer metastasizing. Of course, the accuracy of this method would have to be close to 100%; I can't even imagine the legal liability that would come along with this kind of technology. But a test like this could completely revolutionize breast cancer diagnosis. Maybe then I wouldn't have to hear about how mastectomies "objectify women" because of some silly conspiracy theory about male doctors trying to take over the world... but I digress. We all know how I feel about Vassar College feminazis...
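
This post doesn't describe how the 31-gene signature actually turns expression levels into a prediction, so the sketch below is only a guess at the general shape of such a test. It assumes a weighted sum of standardized expression values (a "risk score") plugged into a simple proportional-hazards-style model with a constant baseline hazard. The gene names, weights, baseline hazard and function names are all hypothetical, not the panel's.

```python
from math import exp


def signature_score(expression, weights):
    """Risk score: weighted sum of standardized expression values for the signature genes.

    expression: gene -> standardized expression (z-score) for one tumor
    weights: gene -> hypothetical per-gene weight (positive = higher risk)
    """
    missing = set(weights) - set(expression)
    if missing:
        raise ValueError(f"missing signature genes: {sorted(missing)}")
    return sum(w * expression[gene] for gene, w in weights.items())


def metastasis_free_probability(score, years, baseline_hazard=0.02):
    """P(no metastasis by `years`) if the yearly hazard is baseline_hazard * exp(score).

    With a constant hazard h, the probability of no event by time t is exp(-h * t).
    """
    hazard = baseline_hazard * exp(score)
    return exp(-hazard * years)


# Hypothetical 3-gene stand-in for the real 31-gene panel
weights = {"GENE_A": 0.8, "GENE_B": -0.5, "GENE_C": 0.3}
patient = {"GENE_A": 1.2, "GENE_B": 0.4, "GENE_C": -1.0}
score = signature_score(patient, weights)
for years in (1, 5, 10):
    print(years, round(metastasis_free_probability(score, years), 3))
```

A higher score means a higher hazard, so the predicted metastasis-free probability drops faster over time; that is what makes it a "time-to-event" prediction rather than a yes/no one. A real panel would fit its weights and baseline on patient outcome data, which is exactly where the accuracy (and liability) questions come in.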

August 12, 2010

altering immune-related gene levels in MS by targeting regulatory microRNAs

Members of the Australia and New Zealand MS Genetics Consortium (ANZgene) compared miRNA patterns in blood samples from 59 MS patients and 37 healthy controls (PLoS ONE link soon to come). Two specific microRNAs, miR-17 and miR-20a, were found at significantly lower levels in the blood of MS patients (relapsing-remitting, primary progressive and secondary progressive MS) than in that of unaffected individuals. Both of these miRNAs are thought to regulate immune genes in humans by curbing the expression of genes involved in T-cell activation. Therefore, these miRNAs could be very useful in developing novel MS treatments.
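
For anyone wondering what "significantly lower levels" means in practice: comparing a miRNA's level between patients and controls usually comes down to a two-sample test. The post doesn't say which test the ANZgene team used, so this is just a minimal sketch of one common choice, Welch's t statistic (which doesn't assume the two groups have equal variances), run on made-up expression values rather than the study's data.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    A negative t means group_a has a lower mean than group_b.
    """
    se2_a = variance(group_a) / len(group_a)  # squared standard error of each mean
    se2_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df


# Made-up relative miR-17 levels, NOT data from the ANZgene study
ms_patients = [0.62, 0.71, 0.55, 0.80, 0.66, 0.59, 0.73]
controls = [0.95, 1.10, 0.88, 1.02, 0.97, 1.05]
t, df = welch_t(ms_patients, controls)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Turning t and df into a p-value needs the t distribution (SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` does the whole thing in one call), and when many miRNAs are screened at once, a multiple-testing correction comes on top of that.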

Aside from the recent use of miRNA technologies, MS research has focused heavily on HLA loci and epigenetic factors associated with MS incidence. However, miRNA associations could be much more helpful in understanding MS development and therapeutic approaches. Results from the aforementioned study suggest that down-regulation of miR-17 and miR-20a is linked to elevated mRNA levels of some of the same T-cell-related genes that are over-expressed in MS patient blood samples, which suggests that these miRNAs contribute to MS development.

The ANZgene team concluded that:

Even if the miRNAs under-expressed in MS were not directly contributing to the immune cell signature observed in MS whole blood, the excessive T-cell activation signature seen in MS and other autoimmune diseases suggest agents which can reduce this activity may be therapeutically beneficial.

Whether or not the miRNAs contribute directly to the disease, it might be possible to target these kinds of regulatory miRNAs and thereby tweak immune-related gene levels in MS.

August 10, 2010

post-grad thoughts: academia vs. industry

Lately, the pressure has been on to start thinking about "what I'm going to do with my life". I've known, probably since 9th grade, that I have a passion for genomics/neuroscience/pharmacogenomics. I would be very happy sitting at a bench with test tubes, gels, sequencing technology, all of it. Unfortunately, word on the street is that jobs like that don't really "pay well" and aren't worth the next six years of grad school tuition and education. Soooo, now I find myself asking: do I want to be in academia, or do I want to get into pharmacogenomics and take an industry job?

The deciding factor for me seems to be that industry tends to offer more and better financial opportunities, while academia would most likely offer more flexibility to explore my own research interests. And while these paths seem pretty divergent, they appear to require the same fundamental skills: writing well, being a good scientist (broad, I know) and keeping up with current research trends.

Sometimes I wonder whether, in my future, the two will have to be mutually exclusive. And I really wonder whether I will actually get to choose for myself. I'm going to have to find a way to make a living in research somehow, and most likely I'll end up in whichever one lets me in.

August 5, 2010

more accurate SNP identification by using population sequence data: the SNIP-seq method

Researchers at the Scripps Research Institute in La Jolla, CA, have devised a cutting-edge program called SNIP-seq that identifies SNPs and calls individuals' genotypes. The program is designed to use population sequence data (i.e., when at least 20 samples/individuals have been sequenced across the same genomic regions) to identify SNPs. In addition, SNIP-seq assigns a genotype for each SNP to each sample.
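
The core idea, using many individuals' reads at the same position at once, can be illustrated with a deliberately simplified sketch. This is NOT the SNIP-seq algorithm (see the Genome Research paper for how it actually works); it just assumes independent reads and a single, made-up per-base error rate. Step one pools the non-reference ("alt") read counts across all samples and asks whether there are more than sequencing errors alone would plausibly produce (a binomial tail test); step two, for a site that passes, assigns each sample the genotype (0, 1 or 2 copies of the alternate allele) that best explains its own reads. The function names and thresholds are hypothetical.

```python
from math import exp, lgamma, log


def log_binom_pmf(k, n, p):
    """log P(X = k) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    return (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
            + k * log(p) + (n - k) * log(1 - p))


def binom_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(exp(log_binom_pmf(i, n, p)) for i in range(k, n + 1))


def call_site(alt_counts, depths, error_rate=0.01, alpha=1e-6):
    """Pool reads from every sample at one position: too many alt reads for errors alone?"""
    k, n = sum(alt_counts), sum(depths)
    p_value = binom_upper_tail(k, n, error_rate)
    return p_value < alpha, p_value


def genotype(alt, depth, error_rate=0.01):
    """Most likely number of alt-allele copies (0, 1 or 2) given one sample's reads."""
    expected_alt_fraction = {0: error_rate, 1: 0.5, 2: 1 - error_rate}
    return max(expected_alt_fraction,
               key=lambda g: log_binom_pmf(alt, depth, expected_alt_fraction[g]))


# 20 hypothetical samples at 10x depth; three are heterozygous (5 alt reads each)
alt_reads = [5, 5, 5] + [0] * 17
depths = [10] * 20
is_snp, p = call_site(alt_reads, depths)
if is_snp:
    print([genotype(a, d) for a, d in zip(alt_reads, depths)])
```

Pooled across 20 samples, 15 alternate reads out of 200 is far more than a 1% error rate predicts, while 2 out of 200 would not be. Real sequencing errors tend not to be independent (they cluster in certain sequence contexts and on certain strands), which is part of why dedicated methods like SNIP-seq exist.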

This method is reported to be highly accurate, and it reduces the rate of false positives caused by sequencing errors. Vikas Bansal, the first author of the Genome Research paper (abstract), explained the motivation for developing this technology:

...You have a lot of tools for aligning the short reads generated by the next-gen sequencing platforms to a reference genome and you also have tools for identifying SNPs, but when you have population sequence data, you can leverage the fact that you have multiple individuals' sequences across the same genomic regions to improve both the accuracy of SNPs and the genotype calling.

The researchers evaluated the accuracy of their method in a really cool way: they used sequence data from a 200-kb region on chromosome 9p21 (home to variants that increase a person's risk of developing coronary artery disease and diabetes) from 48 individuals. The SNIP-seq method proved accurate at detecting variants and filtered out false SNPs. The even cooler thing is that the researchers "stumbled" across novel SNPs in this chromosomal region, which they later validated using pooled sequencing data and confirmed with Sanger sequencing.

This new, more accurate method (false-positive rate of ~2%, down from ~5%) can help us re-sequence genomic regions known to be associated with disease and thereby detect rare variants that might contribute to disease progression. Previous, less accurate methods that called false-positive SNPs potentially impeded disease research; SNIP-seq can help distinguish false SNPs from real ones. This breakthrough use of population sequencing data will hopefully lead to more accurate study of disease-causing genetic variants and viable therapeutic/pharmacogenomic targets.

August 4, 2010

to publish or not to publish, that is the question...

GenomeWeb posted a pretty cool article this month about the dilemma of whether or not to publish in Science. I think it's pretty well written and makes very valid points, so instead of reiterating it, here's the link.

When I read articles assigned in class, or journal articles on my own, I always find myself looking for ways the researchers might be presenting their conclusions to suggest more of an effect than there really is. This article raises that same question and cites Daniele Fanelli, whose research reportedly found that this 'positive bias' grows as you move away from the physical/chemical sciences and toward the behavioral sciences along the "spectrum of science". I would expect that to be the case, though, since chemistry is largely based on strict numbers and calculations, whereas behavioral studies rely heavily on observations and on drawing (often subjective) conclusions.

They talk about "changing the system". Journals specifically for negative results!? That just seems kind of unnecessary and weird to me. I don't think this positive bias can ever be removed entirely, but I think it's necessary to make the scientific community aware of it when reading newly published literature.

My own proposal? More websites like Faculty of 1000 Biology. There, established professionals read new literature and flag the papers they think are especially cutting-edge or valuable for future research. They also take validity into consideration, and most of the people rating these articles have a pretty good eye for true results when they see 'em. It's a great help for gathering sources for a paper, or just for pointing you in the right direction for leisurely reading about new things in the field.

July 30, 2010

a single cell's protein and mRNA copy number for any given gene are uncorrelated

Okay. So let me preface this by telling you all how excited I was by this article (you must log in to Science magazine to view the full article): on a scale of 1 to 10, I think I'm circling around an 8.

Anyway, I've always assumed that higher mRNA levels are directly correlated with increased gene expression, and therefore with higher levels of the protein encoded by that gene. Right?

Welp. Apparently, according to researchers at Harvard and the University of Toronto, that's not necessarily true, because mRNAs and proteins last for different lengths of time in cells. The study used YFP (yellow fluorescent protein) tagging and single-molecule FISH, which allowed the researchers to quantitatively assess both protein levels and the mRNA molecules expressed from the YFP-tagged genes. Working in E. coli, Taniguchi, et al. describe their approach as "providing quantitative analyses of both abundance and noise in the proteome and transcriptome on a single-cell level". As Sunney Xie comments, "this provides a cautionary note for people who want to do single cell mRNA-profiling".

The main finding of this study is that a single cell's protein and mRNA copy numbers for any given gene are uncorrelated. As the authors note, the study highlights the disconnect between proteome and transcriptome analyses of a single cell. It's also important for understanding how cells coordinate the expression of proteins that work together, such as the subunits of multi-subunit proteins or proteins that act in the same metabolic pathway.

July 26, 2010

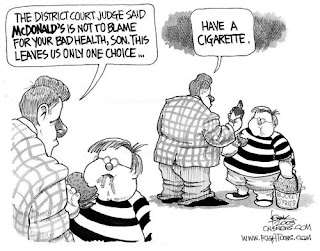

hipsters: not only do I not like you, but your transcriptomes don't either.

Image: naturopathicyoga.com

Genome-wide quantitative transcriptional profiles from 1,240 subjects in the San Antonio Family Heart Study* (including ~300 current smokers) identified 323 unique genes whose expression levels were significantly correlated with smoking behavior! (provisional abstract) These genes have been implicated in immune response, cancer and cell death. In other words, the study suggests that smoking cigarettes can alter the expression of hundreds of white blood cell genes. As an article on GenomeWeb points out, "a comprehensive picture of how smoking affects the body has been difficult because cigarette smoke contains thousands of compounds that seem to act throughout the body".
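
A side note on "significantly correlated": a genome-wide profile tests thousands of genes at once, so at p < 0.05 you'd expect hundreds of hits by chance alone unless the p-values are corrected for multiple testing. I haven't checked which correction the authors used; one common choice is the Benjamini-Hochberg procedure, which controls the false discovery rate. A minimal sketch, with made-up p-values:

```python
def benjamini_hochberg(p_values, fdr=0.05):
    """Indices of tests declared significant while controlling the false discovery rate.

    Sort the p-values; find the largest rank k with p_(k) <= fdr * k / m;
    every test ranked 1..k is declared significant (a "step-up" rule).
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= fdr * rank / m:
            cutoff = rank
    return sorted(order[:cutoff])


# Made-up p-values for eight genes (not from the study)
p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
print(benjamini_hochberg(p))  # -> [0, 1]
```

Only the two smallest p-values survive, even though five of the eight are below 0.05 on their own.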

With the current craze in the genomics world to understand how, and to what extent, environmental factors influence an individual's gene expression, these findings are especially exciting. Smoking is an environmental factor, and in just this one study we can see that it affects the expression of hundreds of genes and gene networks. It would be interesting to assess how regular second-hand smoke exposure affects gene expression in people who don't smoke themselves. I'm sure a study like that would push some places (coughvassarcough) to adopt a non-smoking policy more quickly. Speaking of which, today Vanderbilt students found out that, come fall, their campus will go smoke-free, with smoking restricted to specific designated locations (where all the hipsters can gather and indulge). Let's get a move on, Vassar.

*Potential sample bias, considering that the subjects are enrolled in a heart study? Could their gene expression profiles differ because they are in hypertension/obesity experiments? This study should probably be repeated with a random cohort, controlled for gender/SES/family history/age/smoking frequency, with equal numbers of smokers and non-smokers.

July 25, 2010

MS: Vascular or Neurological?

As I have mentioned previously, Multiple Sclerosis has always been defined as a neurological autoimmune disease that affects the central nervous system. Well, there is now reason to speculate that MS is actually (at least partially) induced by narrowed veins in the neck and chest that block the proper drainage of blood from the brain. Dr. Paolo Zamboni, an Italian vascular surgeon who is credited with this vascular theory of MS, has been advocating a "liberation procedure" in which a balloon is inserted into one of these narrowed veins and inflated, with the intent of widening the passageway and improving blood flow from the brain.

This idea is particularly intriguing to me, considering the study is taking place at the Jacobs Neurological Institute at Buffalo General Hospital, steps away from where I worked this summer. We even discussed the possible ethical dilemmas associated with the study in a staff meeting last week. Although I tend to steer clear of the New York Times for science updates, this one article does a great job of explaining what is happening in Buffalo and what issues some people have with the experimentation.

Personally, I think this study is a great idea. I understand the risk behind the surgical procedure, but all the patients involved have consented and understand the risks of undergoing the surgery. The study is double-blind, and some patients will undergo a sham surgery (the balloon is inserted but taken out without being inflated in the target vein), but patients were notified before consenting that they might receive a sham surgery and therefore not the potential therapy. To be honest, a lot of the research happening at the JNI is focused on how we can reduce the side effects of existing MS therapies. The antigenicity of Copaxone and of interferon-beta therapies such as Rebif has been worrisome, and Tysabri, although effective, has been associated with an increased risk of PML, an incurable, untreatable and sometimes fatal brain infection. I think that patients who have been diagnosed with MS and are suffering are, for the most part, open to a potential new treatment, one that most likely carries no more risk than any other surgical procedure. Furthermore, the experimental phase of the study has not even begun yet. Right now, doctors are simply performing the procedures and monitoring patients to make sure that the surgery itself is not harming them. I believe the first procedures took place about a month ago, and it will be a few months until the experimental phase of the study begins.

I myself do not know whether I believe MS is a vascular disease, but I do not see any harm in finding out more. Should this study succeed in establishing the narrowing of blood passageways as a direct cause or exacerbating factor of MS, it could lead to a wide array of new (and most likely less risky) therapeutic options. From a genomics point of view, it could help us identify new genes and loci that might be vital on the pharmacogenomics side of things. I do not know whether this is included in the study, but I do hope that after its conclusion, patients who unknowingly received the sham procedure will be offered the actual liberation surgery if they so choose.

Now, I don't consider myself anywhere close to an MS expert, but based on what I do know, I would expect to find an association between the severity of symptoms/relapses and vein narrowing. However, I think there is too much evidence pointing to MS as a neurologically driven autoimmune disease to dismiss that view. All in all, if you couldn't tell by the frenzied state of my writing in this post, I'm pretty freakin' excited about the potential of the study.

miRNA for noobs

MicroRNA is one of the coolest things ever. If you don't know what it is, do yourself a favor and at least read the Wikipedia article about it. Or take 1:23 to watch a sweet animation. I'm willing to bet miRNA and RNA interference studies hold the key to many of the ambiguities in clinical research.

summer in Buffalo 2010

Yesterday, I completed a 7-week research internship at the Jacobs Neurological Institute in Buffalo. If you had asked me what I knew about Multiple Sclerosis on June 5th, I would have told you what we had touched upon in Physiological Psychology: basically, that it is an autoimmune disease that severely impacts the central nervous system. Over the past month and a half, my knowledge of the disease, and of the field of clinical research in general, has expanded by leaps and bounds.

My original assignment was to assess recent advancements in the field and the future directions research should take. At the end of the internship, the only tangible product I had to show was a 1500-word review paper on the pharmacogenomics and pharmacogenetics of MS. My initial feeling was one of disappointment - I couldn't believe that it had taken me 5 weeks to write 1500 words. But when I realized how far I had come in my understanding of the pharmacogenomics of MS, my disappointment faded.

I've read - and understood (!!) - between 30 and 40 primary research papers published since the beginning of 2010 on everything from miRNA (microRNA) assays, post-translational modification studies and GWAS (genome-wide association studies) to EAE (experimental autoimmune encephalomyelitis) animal models. These are just some of the approaches researchers have taken in the past few years to better understand the factors behind both MS susceptibility and severity.

While I have learned so many interesting things - some of which I will post about at a later date - I think the most prominent and intriguing pieces of the MS puzzle are the links we have made to the individual genome. Given that I am boarding a flight back home in a mere 5 hours, I only have time to talk about one of my favorites for now:

For the past few years, it's been widely accepted that the HLA-DRB1 gene, which encodes the HLA class II histocompatibility antigen DRB1 beta chain, is associated with MS susceptibility. Just this year, researchers built on this by showing that the presence of oligoclonal bands in the CSF (cerebrospinal fluid) is significantly associated with HLA-DRB1 genotype in a West Australian cohort (Wu et al., 2010). This was the first study to show that HLA-DRB1 allele interactions and dose effects influence the frequency of oligoclonal bands**. Wu et al. went a step further, identifying HLA-DRB1*1501 not only as a strong determinant of MS risk but also as a predictor of MS severity, as measured by the MSSS (MS Severity Score). Furthermore, the HLA-DRB1*1201 allele was linked to less severe disease. Another study (Zivkovic et al., 2009) concluded that the tag SNP for HLA-DRB1*1501 (rs3135388) is present at significantly higher frequencies in MS patients than in control subjects.

Before heading off to sleep in anticipation of my long-awaited departure from Buffalo, I think it's worth mentioning another study that ties into the above discoveries. In 2008, Hoffmann et al. found that the HLA-DRB1*0401 and HLA-DRB1*0408 alleles are strongly associated with the development of antibodies against interferon-beta therapy for MS. I wonder whether this means both alleles are also linked to more severe cases of MS. And would that mean the HLA-DRB1*1501 allele is also associated with a stronger antibody response to IFN-beta? Cool stuff. For a genomics nerd, at least...

** The presence of OCBs (oligoclonal bands) in the CSF usually indicates immunoglobulin production in the CNS, suggesting MS in the patient. Source: http://library.med.utah.edu/kw/ms/mml/ms_oligoclonal.html
